How we cut 10 minutes off our deploy time by using storage buckets

We moved our SPA to a storage bucket. Here we detail how it went.

At Nested, one of our applications is an internal tool. We decided long ago to build it as a fully client-side single-page app (SPA), and functionally that has served us very well.

We use the Google Cloud Platform. It offers a wide variety of quality services at a sensible price.

The plan for the SPA deployment was to use GAE (Google App Engine), as it's the service intended for deploying applications.

We also use CircleCI to run our PR and “merge to master” workflows.

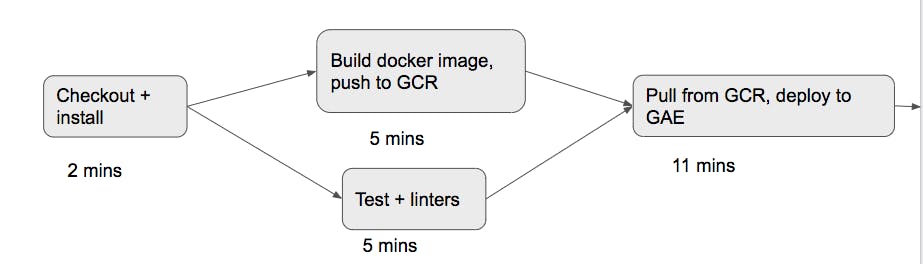

The flow for our merge to master looked like the below:

For those unfamiliar, GCR is Google Container Registry, a place for storing and pulling Docker images. We actually built our JavaScript bundle inside the image and pushed that to GCR.

Most of the workflow was pretty efficient (running in parallel) except for the deploy to GAE, which took 11 minutes on its own. The result was:

- A merge to live time of 17 minutes

- A complete deploy time of 26 minutes

As all the processing is done in the browser, we had the idea to run the application from a bucket on GCS (Google Cloud Storage) and so remove the time-heavy GAE deployment step.

The results

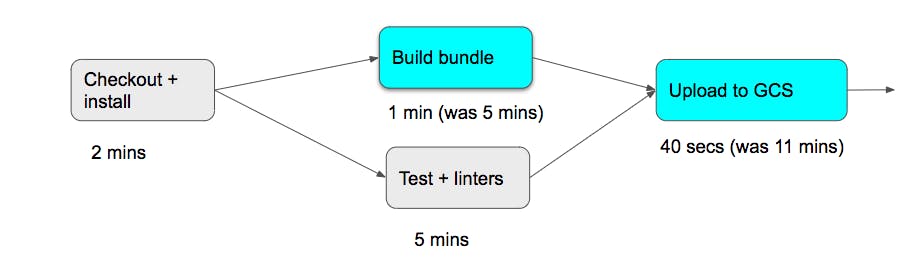

The change itself was an update to our CircleCI config file and it resulted in the below flow:

As you can see, the entire dependency on GAE and GCR has now been removed. Rather than using GCR, we use the CircleCI workspace. By persisting and re-attaching it, we are able to build the bundle in parallel with the tests and linter, and then upload it to our bucket on success.
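The fan-out and fan-in shape of that flow can be modelled in a few lines of Python. This is only a sketch of the idea, not the CircleCI config itself: `run_pipeline`, the step names, and the `upload` callback are all hypothetical, and threads stand in for parallel CI jobs.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


def run_pipeline(steps: Dict[str, Callable[[], bool]], upload: Callable[[], None]) -> bool:
    """Run every step concurrently; call upload only if all of them succeeded."""
    with ThreadPoolExecutor(max_workers=max(1, len(steps))) as pool:
        # Fan out: build, tests, and lint (hypothetical steps) start together.
        futures = {name: pool.submit(step) for name, step in steps.items()}
        # Fan in: wait for every step and collect its success flag.
        results = {name: future.result() for name, future in futures.items()}
    if all(results.values()):
        upload()
        return True
    return False
```

The point of the shape is that the bundle build no longer sits in front of, or behind, the checks: the total time is governed by the slowest of the parallel steps plus the upload.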

The overall saving is quite staggering:

- Merge to live time down from 17 minutes to 8 minutes, a saving of almost 10 minutes.

- Complete deploy time down from 26 minutes to 14 minutes, a saving of 12 minutes. This allows developers to move on to the next task faster.

- It has cut ~$60/month off our bill.

Any issues?

We had a number of concerns that we had to settle before we could make the change with confidence. Here are those concerns and our answers to them.

1. Will there be a difference in the Node.js dependency cache?

The image always installed dependencies fresh, whereas CircleCI has a cache. However, we noted that the CircleCI cache is keyed on the lockfile hash (and CircleCI itself runs on Docker), so if anything has changed in your lockfile, everything is downloaded fresh. Problem solved.
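To make the cache-busting behaviour concrete, here is a minimal sketch of a cache key derived from the lockfile contents. The function names, the `deps-v1` prefix, and the choice of SHA-256 are assumptions for illustration; CircleCI computes its own checksum internally.

```python
import hashlib
from typing import Set


def dependency_cache_key(lockfile_bytes: bytes, prefix: str = "deps-v1") -> str:
    """Build a cache key that changes whenever the lockfile content changes."""
    digest = hashlib.sha256(lockfile_bytes).hexdigest()
    return f"{prefix}-{digest}"


def should_install_fresh(lockfile_bytes: bytes, cached_keys: Set[str]) -> bool:
    """Return True when no stored cache entry matches the current lockfile."""
    return dependency_cache_key(lockfile_bytes) not in cached_keys
```

Any edit to the lockfile produces a different key, so the old cache entry is simply never matched and the install starts from scratch, which is the same outcome as the fresh install in the Docker image.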

2. Will environment variables all work the same?

Ensuring that all the variables found in the Dockerfile were moved into the Circle config was crucial. Without this we would not be able to serve an optimised bundle, and would have wound up serving an 8 MB JS file rather than a 300 KB one.
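A check like this can be automated. The sketch below, which assumes simple one-variable `ENV` lines and uses hypothetical helper names, lists the variables declared in a Dockerfile that are absent from a given set of CI variable names.

```python
import re
from typing import Iterable, Set

# Captures the variable name in both "ENV KEY=value" and "ENV KEY value" forms.
# Limitation: only the first variable on a multi-variable ENV line is picked up.
_ENV_LINE = re.compile(r"^\s*ENV\s+([A-Za-z_][A-Za-z0-9_]*)", re.IGNORECASE)


def dockerfile_env_names(dockerfile_text: str) -> Set[str]:
    """Collect the names of variables declared with ENV in a Dockerfile."""
    names = set()
    for line in dockerfile_text.splitlines():
        match = _ENV_LINE.match(line)
        if match:
            names.add(match.group(1))
    return names


def missing_in_ci(dockerfile_text: str, ci_names: Iterable[str]) -> Set[str]:
    """Return Dockerfile variables that have no counterpart in the CI config."""
    return dockerfile_env_names(dockerfile_text) - set(ci_names)
```

An empty result from `missing_in_ci` means every variable the image relied on has been carried over.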

3. Can we deploy without an outage?

An outage would be a disaster for our internal operations team, so it was crucial that we made these architecture changes without incurring one. Thankfully the index file and the JS asset it points to are built together, so anyone using the bucket's index file is also using the latest JS asset.

4. What about asset caching?

As we are constantly pushing new assets to the bucket, we need to ensure the index file is not served from a stale copy in the user's browser, otherwise the user would pull an old asset.

Thankfully, GCS allows you to set metadata headers when you upload. This lets us give the JS asset a long cache life (as it has a hash in its name) and set the index to "no-cache", so the browser revalidates it on every load and the latest is always fetched.
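The rule itself is small enough to sketch. The naming pattern (a hex hash of eight or more characters before the extension), the one-year `max-age`, and the function name are assumptions for illustration; the returned string is what would be set as the object's `Cache-Control` metadata at upload time.

```python
import re

# Assumed naming scheme: a hex content hash before the extension,
# for example "main.3f9a1c2b.js".
_HASHED_ASSET = re.compile(r"\.[0-9a-f]{8,}\.(js|css|map)$")

LONG_LIVED = "public, max-age=31536000, immutable"
REVALIDATE = "no-cache"


def cache_control_for(object_name: str) -> str:
    """Pick a Cache-Control value based on whether the name carries a hash."""
    if _HASHED_ASSET.search(object_name):
        # A hashed name never changes content, so it is safe to cache for long.
        return LONG_LIVED
    # Everything else (notably the index) must be revalidated on each load.
    return REVALIDATE
```

Defaulting to "no-cache" for anything unrecognised is the conservative choice: a wrongly revalidated file costs a request, while a wrongly cached index would pin users to an old bundle.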

5. What is the difference in rolling back?

This one is slightly controversial. GAE keeps a record of stopped instances, so you can start up an old instance relatively quickly. With our new setup we have 2 choices:

- Manual fix: update the index in the bucket to point to an old asset. This would likely take less than a minute.

- Automated fix: re-run the CircleCI deploy job for a previous (and successful) commit, so the hash and index are updated. This would take a minimum of 8 minutes.

We were happy to take the slight hit as we do not experience outages often.
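For the manual fix, the edit to the index amounts to swapping one script reference. A minimal sketch, assuming the index contains exactly one `src="..."` attribute pointing at a hashed `.js` bundle (the function name and file names are hypothetical):

```python
import re

# Assumed shape of the reference: src="<path>.<hex hash>.js".
_BUNDLE_SRC = re.compile(r'(src=")([^"]*?\.[0-9a-f]{8,}\.js)(")')


def point_index_at(index_html: str, previous_asset: str) -> str:
    """Return index_html with its hashed bundle reference replaced."""
    updated, count = _BUNDLE_SRC.subn(
        lambda m: m.group(1) + previous_asset + m.group(3), index_html
    )
    if count != 1:
        # Refuse to guess if the index does not look the way we expect.
        raise ValueError(f"expected exactly one hashed bundle reference, found {count}")
    return updated
```

This only works while the old asset is still present in the bucket, which is one reason to leave previous hashed bundles in place for a while after each deploy.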

Overall

We are really pleased we made the decision to go ahead and use a storage bucket over GAE. As you can see, it is a simpler architecture (with fewer moving parts), which makes it easier to manage as well as more time efficient.

It has been running successfully for a month now, so we recommend that anyone with a similar application move it to a bucket.